Note: This is a work in progress article and may be updated regularly based on feedback.

There are many business analytical needs whose data is in the form of “State Workflow”. A state workflow consists of a set of states. There are a valid number of “State Transitions”, i.e. changing of one state to another. The state transitions happen by some event. There can be a valid number of events responsible for state transition.

The typical business problem that conforms to above situation is “Sales Pipeline” analysis. The typical queries that need to be resolved are:

- How long it took to move from one state to another?

- How many items moved from one state to another?

- How much time spent in each state for a given period range?

- What is the count of items in a given state for a period range vs another period range?

- What is the aggregation of a related attribute with reference to state?

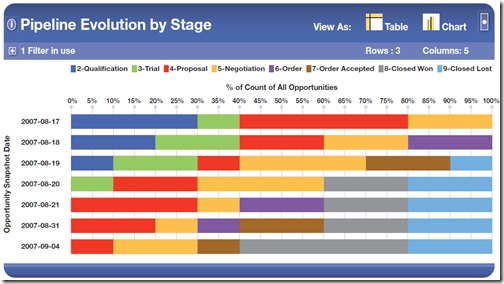

One typical analysis as presented by below graph is that for each day, what is the "% of Count of All opportunities" in each stages of sales pipeline.

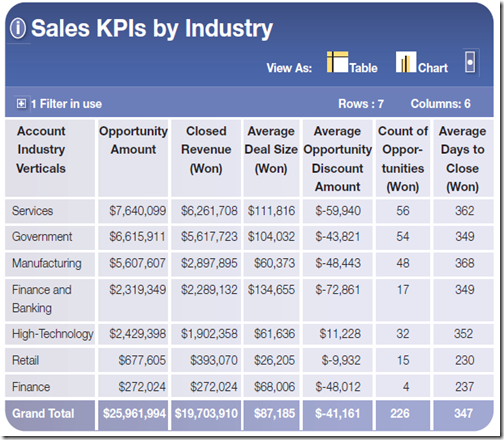

Or analyze the data using additional dimension such as "by Industry"

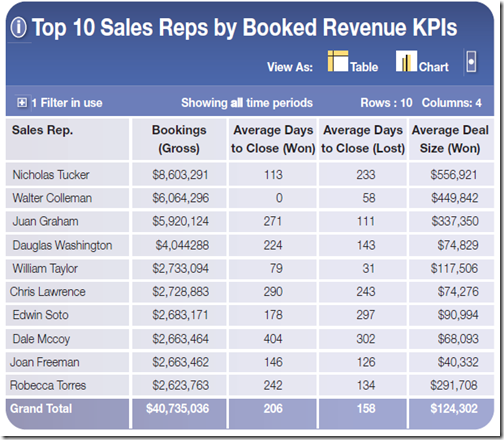

Or by "Booked Revenue"

The question is how we design our OLAP system to do such kind of analysis. Well, the trick lies in creating an intelligent fact table where we capture many of details at the time of ETL process itself rather than importing the transaction system "AS-IS" and hoping that some OLAP magic wand would answer all of our queries.

Let us understand it with some simple example which needs similar kind of analysis. In today’s rising gasoline cost, what better example is to understand how I drive or use my car?

If you think about operating a car, you will immediately think of several states that could be modeled in a workflow. Here are the states that I’ve chosen for this example:

- Not Running: In this state, you are in the car but the engine is not running.

- Idling: You’ve now started the engine, but you’re not moving.

- Moving Forward: The car is moving forward.

- Moving in Reverse: The car is moving in reverse.

- Done with the Car: You are finished with the car.

There are many other states that you could model, but this list is enough to provide a substantial example. The next step is to identify the events that can occur while you are in each state.

Below table lists the events that are allowed for each state, along with the planned state transitions as each event is handled.

(Well, I still drive a manual gear car, for the sheer pleasure of it)

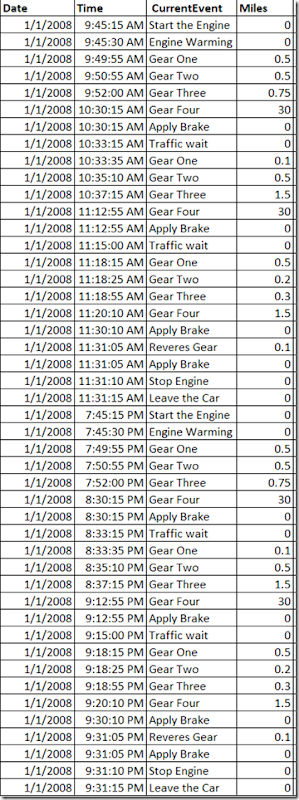

Lets look at our typical transactional system. Lets say I have an advanced tracking system installed in my car which records the states and events in a file. Typically, the transactional data recorded is very simple in the following structure:

Now, the typical analytical questions to understand my driving habits are:

On a given day, month or year:

- How many miles I drive in “Moving Forward”+ “Moving in Reverse” or individual state?

- How many times I “Apply Brake” while in “Moving Forward” state?

- How many miles I drive in “Gear Four” event in “Moving Forward” state? (higher the gear, better is the mileage)

- How much time is spent in “Idling” state for “Traffic Wait” event? (higher the time, higher is the fuel wastage)

- How many times, I do “Start the engine” event from “Not Running” state?

- How many times, I do “Beep Horn” even? (Am I compulsive honker?)

- How many times I change gears while in “Moving Forward” state?

- What is my average speed per trip?

- What is my average speed per trip while in “Moving Forward” state?

- Many more you can think of.

To answer the above analytical questions from our transactional data presented above is difficult for the end users. If a cube is created directly on the above transactional data, the MDX queries would be even more difficult. The point is, we want our end users to do more of analysis rather than spend effort on arranging the data prior to analysis.

The trick is to setup a ETL system which produces a synthesized fact table for data analysis. Lets take the below fact table which presents the same transactional data provided above:

From the above synthesized fact table, lets review if we can get the answers of our typical analytical questions to understand my driving habits. I think it should answers all of them.

We can create an OLAP cube on top of this fact table and using aggregation functions, answer the same analytical questions using MDX queries or expose the data to some OLAP client. Well, this is a topic for some other day.

Source Click Here.

Post a Comment